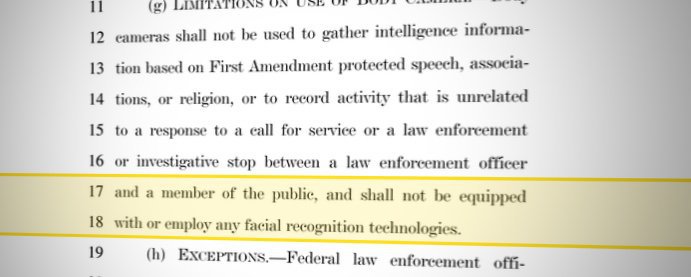

Citing fears about massive errors and invasion of privacy, 85 civil rights organizations sent letters on January 15th imploring Amazon, Google, and Microsoft to stop selling facial recognition technology to government agencies and to take a closer look at its potential for abuse.

In recent months, Google and Microsoft have made public statements emphasizing the need for governments and corporations to develop and deploy the new technology thoughtfully and responsibly. Amazon, however, has taken a more passive approach to the potential for abuse, marketing its technology to Immigration and Customs Enforcement (ICE) as well as to local police departments. Company shareholders - representing $1.32 billion in assets - recently filed a resolution with Amazon expressing their concerns, an issue Amazon will have to address but on which it has not yet commented.

The coalition sending the letters - composed of immigrant, religious, and social-justice organizations - is urging the three companies to be cognizant of their potential role in perpetuating racially charged law enforcement practices. The coalition’s letters do not call for an end to research into the technology, but they urge closer analysis of its potential for harm.

The market for facial recognition technology as a whole is expected to double in the next four years to an estimated $8 billion. With the potential for applications in advertising, education, healthcare, and personal security, the development of the technology likely won’t slow, contributing to the urgency of the letter writers’ request for careful study.

This week’s letters aren’t the first time these companies have heard complaints about the marketing and use of their facial recognition software - a growing part of their businesses. In July, the American Civil Liberties Union, which spearheaded the letter-writing campaign, pointed out numerous risks in Amazon’s Rekognition software. The civil rights group used it to compare photos of members of Congress against a collection of thousands of mugshots. Amazon’s system returned 28 false matches and was particularly inaccurate when examining photos of people of color. Academic researchers had cited the same issues previously, and they were already causing alarm among people working to protect communities historically targeted by the government and law enforcement.

“I think the history of the 20th century is a long history of the use of surveillance tools of the day,” said Shankar Narayan of the Technology and Justice Project at ACLU of Washington, one of the letters’ signatories, in an interview with MuckRock.

Narayan cited dossiers compiled by the FBI and other law enforcement agencies about members of the civil rights movement in the ’50s and ’60s, as well as the use of automated license plate readers by New York City to track the Muslim community after the September 11th, 2001 attacks.

“So we really see this as being part of that long history except that face surveillance really is game changing, because it can be applied by the government to any existing video. The government doesn’t have to determine ahead of time who it’s going to follow around. And because there’s so much video in public spaces, it means the government’s ability to know who’s where when is supercharged and that means that chilling effect, particularly for vulnerable communities, is always there,” Narayan said.

All of the groups that signed the letters share similar worries. Several wrote that it is best to raise these concerns early, while facial recognition is still in the nascent stages of development.

“[W]e are calling on some of the biggest corporations in the world to commit to be on the right side of history and not sell this harmful technology to law enforcement,” Steven Renderos, Senior Campaign Director at the Center for Media Justice, wrote in an email to MuckRock. “Their leadership could help us reverse course and pressure governments to ban the use of facial recognition.”

In the last few months, the ACLU has met with representatives from the three companies to express concerns about facial recognition software and its potential abuses. After those conversations, Microsoft released ethical principles it plans to apply to its technology. In December, Google stated that, “unlike some other companies,” Google Cloud had acknowledged “important technology and policy questions” that need to be addressed before it can offer access to “general-purpose facial recognition APIs.”

“With Amazon, the response really has been to not acknowledge any responsibility - I think that was largely the case in the meeting that we had with them - and is reflected in Jeff Bezos’s comments, which, to me, come from a place of immense privilege,” said Narayan, who sees the statements from Microsoft and Google as inadequate but still a sign of progress. “If you’ve never been on the wrong end of surveillance technology, it’s very easy to say that nothing bad is going to happen or that we should just wait until society develops an ‘immune response,’ but there’s a growing body of research that shows we’re not in that space.”

Advocates believe a serious public discussion is imperative. At an October 2018 event, Amazon CEO Jeff Bezos discussed his stalwart support for the Department of Defense, the commercialization of space, and humankind’s need to plan for the long term; Bezos is funding the construction of a $42 million clock designed to keep time for the next 10,000 years, an example of the kind of symbol-building he believes will be crucial to the progress of the species.

“Technologies are always two-sided. There are ways they can be misused as well as used. The book was invented and people could write really evil books and lead bad revolutions with them … that doesn’t mean the book is bad,” Bezos said in October at the Wired25 summit. “I feel that society develops an immune response eventually to the bad uses of new technology, but it takes time.”

In the same conversation, Bezos acknowledged that part of the problem with technology today is that it encourages “identity politics and tribalism,” reinforcing confirmation bias and increasing the potential for discrimination. Many of those signing the letters believe these exact concerns are being exacerbated by the current use of facial recognition software and the lack of public conversation about the technology.

“Public safety as a rationale is not a substitute for a public policy discussion,” said Paromita Shah of The National Immigration Project of the National Lawyers Guild, which is currently suing ICE to obtain biometric documents the agency would not release under the Freedom of Information Act. “DHS is emerging as the nation’s federal police force, and it should not be able to amass this kind of technology without a public examination and discussion about its use, purpose, scale and design.”

Miguel Maestas, Housing and Economic Development Director of another participating group, El Centro de la Raza, told MuckRock that the need for caution is urgent.

The group’s founder, Maestas’ uncle Roberto Maestas, “would often evoke the concept of the beloved community, which Dr. Martin Luther King often talked about and conceptualized as a community free of poverty, racism, militarism, and violence, and said that everything we do has to be part of building the beloved community,” he said.

The organization has been active in Seattle for 47 years, providing programming and resources to the immigrant and refugee communities.

“That’s part of the foundation of our organization and drives our work, including our efforts to be part of this organizing in relation to the facial recognition technology,” Miguel Maestas said.

Algorithmic Control by MuckRock Foundation is licensed under a Creative Commons Attribution 4.0 International License.

Based on a work at https://www.muckrock.com/project/algorithmic-control-automated-decisionmaking-in-americas-cities-84/

Image by YO! What Happened To Peace? via Flickr, licensed under CC BY-SA 2.0