Over the years, a number of requesters, researchers, and members of the public have asked whether we think who the requester is impacts how requests are handled. Under the law, every requester should be treated the same, but we’ve seen a number of cases where different requesters get different answers (and prices, and response times) for largely identical requests.

So we decided to analyze our data to see whether one factor, ethnicity as predicted from the requester’s name, swayed how requests were handled.

- Who appears to be filing FOIA requests?

- Are there differences in response rates based on perceived race/ethnicity?

- Are there differences in response rates based on jurisdiction level (federal, state, or local)?

- Limitations and thoughts on further analysis

First, some important caveats.

Predicting ethnicity and race from a name is a notoriously flawed and inexact exercise; a number of users we know personally, for example, were matched incorrectly. That means the data does not give a reliable breakdown of who actually makes up our user base.

But since we’re mostly interested in how the requester’s ethnicity and race are perceived by the public records officer, rather than what they actually are, we don’t need the predictions to be correct, only to match the perceptions of the people processing the request.

Second, MuckRock’s users are self-selecting. While we represent a broad swath of requesters (with over 18,000 registered accounts), MuckRock tends to draw some communities, such as activists, reporters, and researchers, more than others. With a more limited sample of records requesters, a smaller subset of users who happen to write better requests, or file requests with speedier agencies, might skew the data. We go into some of those possible considerations later, and welcome other researchers who want to explore the data with other hypotheses in mind.

Those caveats aside, we found broadly reassuring consistency in how quickly requests were responded to, with one surprising exception. But first, a look at MuckRock’s pool of requesters.

Who appears to be filing FOIA requests?

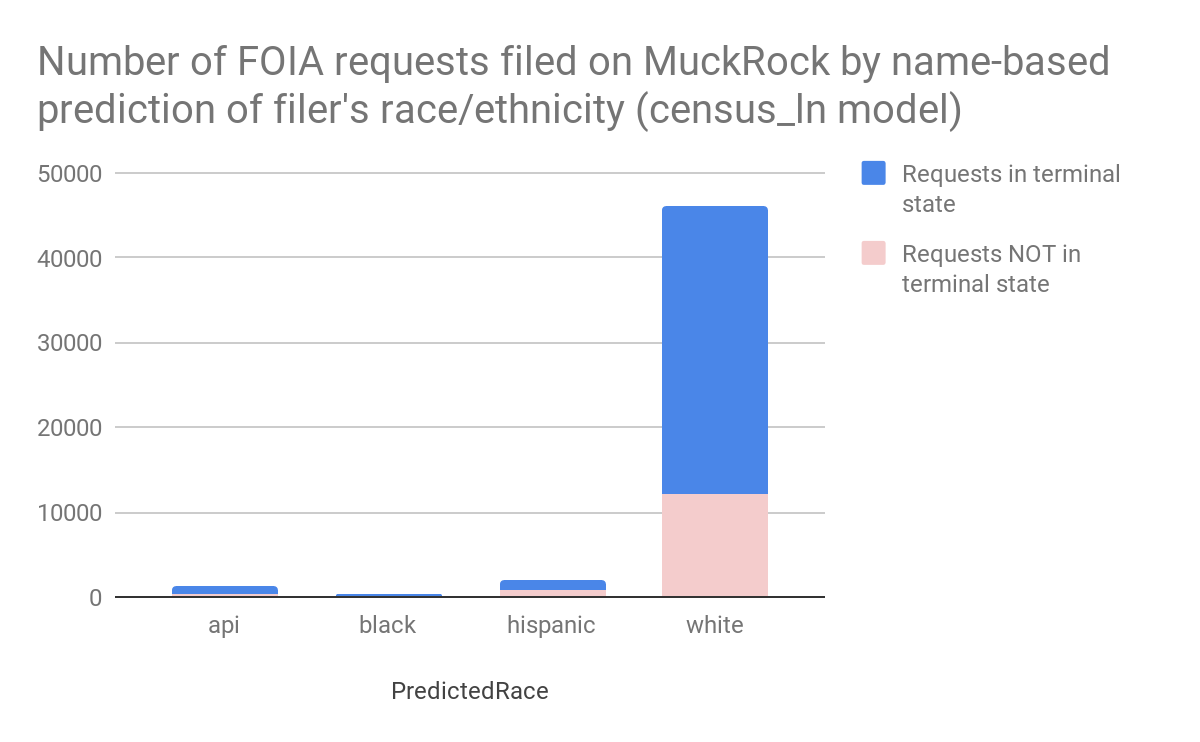

So, what are we looking at here? For starters, we pulled FOIA data from the MuckRock API and joined each request with its filer’s account information. Users’ names, as provided, were analyzed using the ethnicolr Python package to predict each user’s race/ethnicity. These counts represent predicted race/ethnicity, not self-reported race/ethnicity.
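The join-and-count step can be sketched in a few lines of Python. This is a minimal illustration, not our actual pipeline: it assumes requests and users have already been downloaded and that each user record already carries a predicted label (the ethnicolr prediction step itself is not shown), and the field names `id`, `user_id`, and `predicted_race` are hypothetical. Note that it counts requests, not distinct filers.

```python
from collections import Counter


def count_requests_by_predicted_race(requests, users):
    """Join each request to its filer and count requests per predicted race.

    Field names ("id", "user_id", "predicted_race") are hypothetical placeholders.
    """
    # Index users by id so each request can be joined in constant time.
    race_by_user = {u["id"]: u["predicted_race"] for u in users}
    counts = Counter()
    for req in requests:
        race = race_by_user.get(req["user_id"])
        if race is not None:  # skip requests whose filer has no prediction
            counts[race] += 1
    return counts


# Hypothetical toy data, not MuckRock data.
users = [
    {"id": 1, "predicted_race": "white"},
    {"id": 2, "predicted_race": "hispanic"},
]
requests = [{"user_id": 1}, {"user_id": 1}, {"user_id": 2}, {"user_id": 3}]
print(count_requests_by_predicted_race(requests, users))
# white: 2, hispanic: 1 (user 3 has no prediction, so that request is skipped)
```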

As of July 12th, 2018, MuckRock had facilitated 46,068 FOIA requests by filers likely to be perceived as white based on their names. That number compares to 1,964 requests by likely Hispanic filers, 1,302 by filers likely of Asian or Pacific Islander descent, and just 257 by filers likely to be perceived as black.

| Predicted race | Count of FOIA requests through MuckRock |
| --- | --- |
| Asian or Pacific Islander | 1,302 |
| black | 257 |
| Hispanic | 1,964 |
| white | 46,068 |

Are there differences in response rates based on perceived race/ethnicity?

Sort of, but there is a lot of uncertainty around this.

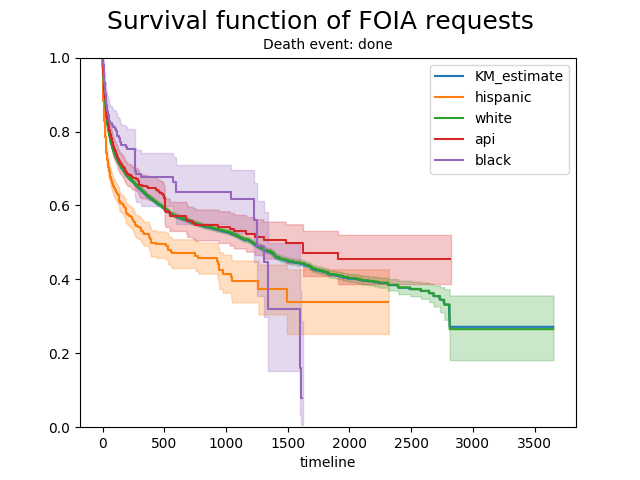

Take the following graph for example. (Find more survival curves here.)

Here, the curve for the white group is essentially the same as the overall estimate. That is expected, since requests from likely white filers make up the large majority of the data.

| Predicted race | Count done | Percent done |
| --- | --- | --- |
| Asian or Pacific Islander | 382 | 40.86 |
| black | 74 | 35.58 |
| Hispanic | 717 | 61.92 |
| white | 14,927 | 43.94 |

The “black” group here is relatively small, with just 74 completed FOIA requests. That’s reflected in the wide uncertainty and jagged form of its curve: twenty completed requests could account for the abrupt drop in the purple line around 1,300 days. Twenty requests marked as done in the “white” group wouldn’t make a noticeable difference in the green curve.
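The jaggedness follows from how a Kaplan-Meier survival curve is built: each time requests are marked done, the curve is multiplied by (1 - completed / still open), so the size of each step depends on how many requests remain open at that point. Below is a minimal stdlib sketch, assuming each request is reduced to days elapsed plus a flag for whether it reached “done” (requests still open at the data pull are treated as censored). The group sizes are invented for illustration and are not our data.

```python
def kaplan_meier(durations, done):
    """Kaplan-Meier estimate of the share of requests NOT yet done.

    durations: days from filing until "done", or until the data pull if still open.
    done: True if the request reached "done", False if still open (censored).
    Returns a list of (day, estimate) pairs, one per day with a completion.
    """
    data = sorted(zip(durations, done))
    n_at_risk = len(data)
    estimate = 1.0
    curve = []
    i = 0
    while i < len(data):
        day = data[i][0]
        completed = leaving = 0
        # Gather every request that completes or is censored on this day.
        while i < len(data) and data[i][0] == day:
            completed += data[i][1]  # True counts as 1
            leaving += 1
            i += 1
        if completed:
            # Step size depends on how many requests were still open.
            estimate *= 1 - completed / n_at_risk
            curve.append((day, estimate))
        n_at_risk -= leaving
    return curve


# Hypothetical toy groups: 20 requests marked done on day 1,300 in each.
small = kaplan_meier([1300] * 20 + [2000] * 20, [True] * 20 + [False] * 20)
large = kaplan_meier([1300] * 20 + [2000] * 3980, [True] * 20 + [False] * 3980)
print(small)                  # [(1300, 0.5)]: the curve halves in one step
print(round(large[0][1], 3))  # 0.995: barely visible
```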

However, the Hispanic and Asian or Pacific Islander groups have curves of more similar shape and more comparable sample sizes (717 and 382 completed requests), and these two curves are distinct with reasonable confidence. In other words, perceived race/ethnicity may affect how long it takes a request to reach the “done” status. More specifically, the analysis showed that having a likely Hispanic name is correlated with a shorter time to reach the “done” status: in the pairwise log-rank tests, the Hispanic group is the only one that differs significantly from each of the other three groups.

Pairwise log-rank test results (api = Asian or Pacific Islander; *** marks a statistically significant difference):

| Predicted race A | Predicted race B | p-value | Significance |
| --- | --- | --- | --- |
| api | black | 0.437883 | |
| api | hispanic | < 0.000001 | *** |
| api | white | 0.194233 | |
| black | hispanic | 0.000005 | *** |
| black | white | 0.163292 | |
| hispanic | white | < 0.000001 | *** |
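For readers unfamiliar with the log-rank test: at every time a request is marked done, it compares how many completions each group had with how many it would be expected to have if both groups shared the same underlying completion rate. The following is a minimal stdlib sketch of the standard two-sample version, run over every pair of groups. It is not the code that produced the table above, the toy data are invented, and in practice a tested implementation such as `lifelines.statistics.logrank_test` would be preferable.

```python
import math
from itertools import combinations


def logrank_test(durations_a, done_a, durations_b, done_b):
    """Two-sample log-rank test. Returns (chi-square statistic, p-value)."""
    # Distinct times at which at least one request (in either group) was marked done.
    event_times = sorted(
        {t for t, d in zip(durations_a, done_a) if d}
        | {t for t, d in zip(durations_b, done_b) if d}
    )
    o_minus_e = 0.0  # observed minus expected completions in group A
    variance = 0.0
    for t in event_times:
        # Requests still open (at risk) just before time t.
        n_a = sum(1 for x in durations_a if x >= t)
        n_b = sum(1 for x in durations_b if x >= t)
        # Requests marked done exactly at time t.
        d_a = sum(1 for x, d in zip(durations_a, done_a) if d and x == t)
        d_b = sum(1 for x, d in zip(durations_b, done_b) if d and x == t)
        n, d = n_a + n_b, d_a + d_b
        o_minus_e += d_a - d * n_a / n
        if n > 1:
            variance += d * (n_a / n) * (n_b / n) * (n - d) / (n - 1)
    if variance == 0:
        return 0.0, 1.0
    chi2 = o_minus_e ** 2 / variance
    # Upper tail of a chi-square distribution with 1 degree of freedom.
    return chi2, math.erfc(math.sqrt(chi2 / 2))


# Hypothetical toy data: group -> (days elapsed, reached "done"?)
groups = {
    "fast": (list(range(1, 11)), [True] * 10),
    "slow": (list(range(11, 21)), [True] * 10),
    "slow_copy": (list(range(11, 21)), [True] * 10),
}
for a, b in combinations(sorted(groups), 2):
    chi2, p = logrank_test(*groups[a], *groups[b])
    print(f"{a} vs {b}: chi2={chi2:.2f}, p={p:.6f}")
```

The loop recounts the at-risk sets at every event time, which is fine for a sketch but quadratic in the number of requests.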

Something that we have not yet sorted out is the possibility of location as a confounding variable in the predicted race analysis. One hypothesis is that the Hispanic group has a higher percentage of FOIA requests filed with agencies that more strictly enforce response timelines, thus resulting in the group having generally faster responses. If so, predicted race could simply be functioning as a proxy variable for request location and jurisdiction. Further assessment is needed to rule that scenario out.
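One quick way to start probing this hypothesis is to compare how each group’s requests are distributed across jurisdiction levels. If likely Hispanic filers sent a noticeably larger share of their requests to state agencies (which the jurisdiction-level curves suggest tend to finish faster), that would be consistent with location confounding the result. A minimal sketch, assuming requests have been reduced to hypothetical (predicted race, jurisdiction level) pairs; the records below are invented.

```python
from collections import Counter, defaultdict


def jurisdiction_mix(records):
    """For each predicted-race group, the share of its requests sent to each
    jurisdiction level. `records` is an iterable of (race, level) pairs."""
    counts = defaultdict(Counter)
    for race, level in records:
        counts[race][level] += 1
    mix = {}
    for race, levels in counts.items():
        total = sum(levels.values())
        mix[race] = {level: n / total for level, n in levels.items()}
    return mix


# Hypothetical toy records, not MuckRock data.
records = [
    ("hispanic", "state"), ("hispanic", "state"), ("hispanic", "federal"),
    ("white", "federal"), ("white", "federal"), ("white", "local"), ("white", "state"),
]
for race, shares in sorted(jurisdiction_mix(records).items()):
    print(race, {level: round(s, 2) for level, s in sorted(shares.items())})
```

A difference in mix alone would not settle the question; a fuller check would stratify the log-rank test by jurisdiction level, or include jurisdiction as a covariate in a Cox proportional hazards model.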

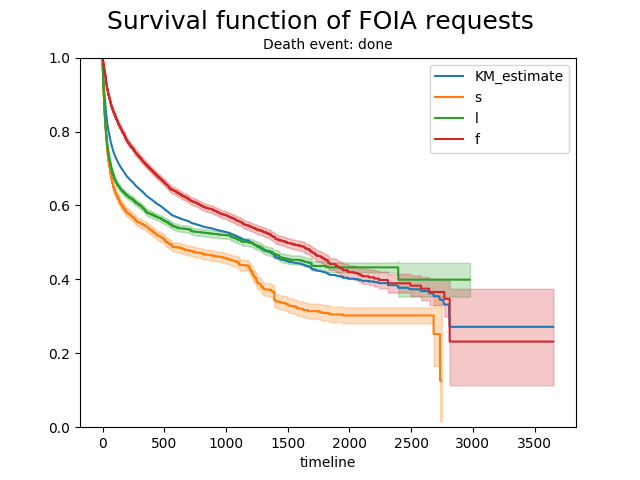

Are there differences in response rates based on jurisdiction level (federal, state, or local)?

Yes. The survival curves below show that FOIA requests at the state level are generally much quicker to get done.

Specifically, the curves show that the median time for a state-level FOIA request to reach the “done” status was 527 days, compared with 1,490 days at the federal level and 1,188 days at the local level.
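A median read off a survival curve is conventionally the Kaplan-Meier median: the earliest time at which the estimated share of requests not yet done falls to 50% or below. Because still-open requests are treated as censored rather than counted as failures, such a median can exist even when fewer than half of a group’s requests are marked done. A minimal stdlib sketch with invented toy data:

```python
def km_median(durations, done):
    """Earliest time at which the Kaplan-Meier estimate of the share of
    requests not yet done falls to 0.5 or below; None if it never does."""
    data = sorted(zip(durations, done))
    n_at_risk = len(data)
    surv = 1.0
    i = 0
    while i < len(data):
        t = data[i][0]
        events = leaving = 0
        # Count everything that leaves the risk set at time t.
        while i < len(data) and data[i][0] == t:
            events += data[i][1]  # True counts as 1 (marked done)
            leaving += 1          # done or censored
            i += 1
        surv *= 1 - events / n_at_risk
        if surv <= 0.5:
            return t
        n_at_risk -= leaving
    return None


# Hypothetical toy data: only 2 of 5 requests are done, but 3 are censored.
days = [5, 6, 10, 20, 30]
done = [False, False, True, True, False]
print(km_median(days, done))  # 20
```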

Limitations and thoughts on further analysis

As noted previously, there are a number of caveats to understanding this data. Other factors that could be impacting outcomes include:

- The analysis doesn’t account for the contents of the FOIA request.

- The analysis doesn’t account for variation in the confidence of name predictions. For example, if a name is predicted to be 42% likely to be Hispanic and 43% likely to be Asian, it is classified as Asian in the survival analysis. This could be taken into account, but would require a more complicated analysis.

- A few people appear to use fake names, or the name of a company or group rather than a personal name. These cases are relatively rare.

- It would be worth further study to see whether controlling for the jurisdiction or agency receiving the request changes the results of the predictive analysis.
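The classification rule in the second bullet amounts to taking the single most likely label (an argmax over the predicted probabilities). A minimal sketch, using made-up probabilities that mirror the 42%/43% example, alongside one hypothetical alternative that leaves a name unclassified when the top two predictions are too close to call. The `min_margin` threshold of 0.1 is an arbitrary assumption, not something used in this analysis.

```python
def classify_by_argmax(probs):
    """Assign the single most likely label, mirroring the rule described above."""
    return max(probs, key=probs.get)


def classify_with_margin(probs, min_margin=0.1):
    """Hypothetical alternative: return None when the top two labels are
    within `min_margin` of each other, instead of forcing a choice."""
    ranked = sorted(probs.items(), key=lambda kv: kv[1], reverse=True)
    if len(ranked) > 1 and ranked[0][1] - ranked[1][1] < min_margin:
        return None
    return ranked[0][0]


# Made-up probabilities mirroring the 42% / 43% example.
probs = {"hispanic": 0.42, "api": 0.43, "white": 0.10, "black": 0.05}
print(classify_by_argmax(probs))    # api
print(classify_with_margin(probs))  # None
```

Dropping ambiguous names trades misclassification for smaller groups, which matters most for groups that are already small.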

For this study, FOIA and user data was most recently pulled on July 12th, 2018. Agency and jurisdiction data was most recently pulled on August 22nd, 2018.

If you are interested in learning more about the research methodology used, or would like sample code to extend the research in other directions, we’d love to talk: Reach us at info@muckrock.com.

Image via US National Archives Flickr