DocumentCloud’s users can already upload documents easily through the web interface. Drag-and-drop uploads are fine for a dozen documents, or even a hundred, but using the web interface to upload thousands of pages takes a toll on DocumentCloud’s indexing engine and can cause performance delays for everyone. It’s also inefficient to split documents into smaller batches, keep track of which ones uploaded successfully and manually check for errors that might require re-uploading.

A few years ago, MuckRock won access to a massive cache of documents, which prompted us to write a batch upload script to help us upload them to DocumentCloud. After a three-year-long lawsuit, MuckRock succeeded in forcing the CIA to release 13 million pages (1 million files) from its CREST archive.

We’re proud to present a rewritten and generalized version of this script to the MuckRock and DocumentCloud community to help users upload large (and small) collections of documents. If you’re more of a visual person, there are also video guides on how to set up and use the batch upload script on Linux, Windows and Mac OS X.

Requirements

The script has a few requirements:

- A verified DocumentCloud account (sign up if you don’t have one; you’ll be prompted to verify when logged in).

- A system with Python and pip installed. Mac OS X users will also need to open the Applications folder, open the Python folder inside it and run the “Install Certificates.command” file, which SSL (and therefore this script) needs in order to work correctly.

- The Batch Upload Script downloaded locally either by downloading the ZIP or installing the GitHub CLI tool and running:

gh repo clone MuckRock/dc_batch_upload - The script dependencies need to be installed by running the following in the terminal in the location of the batch upload script:

pip install -r requirements.txt - Environment variables for

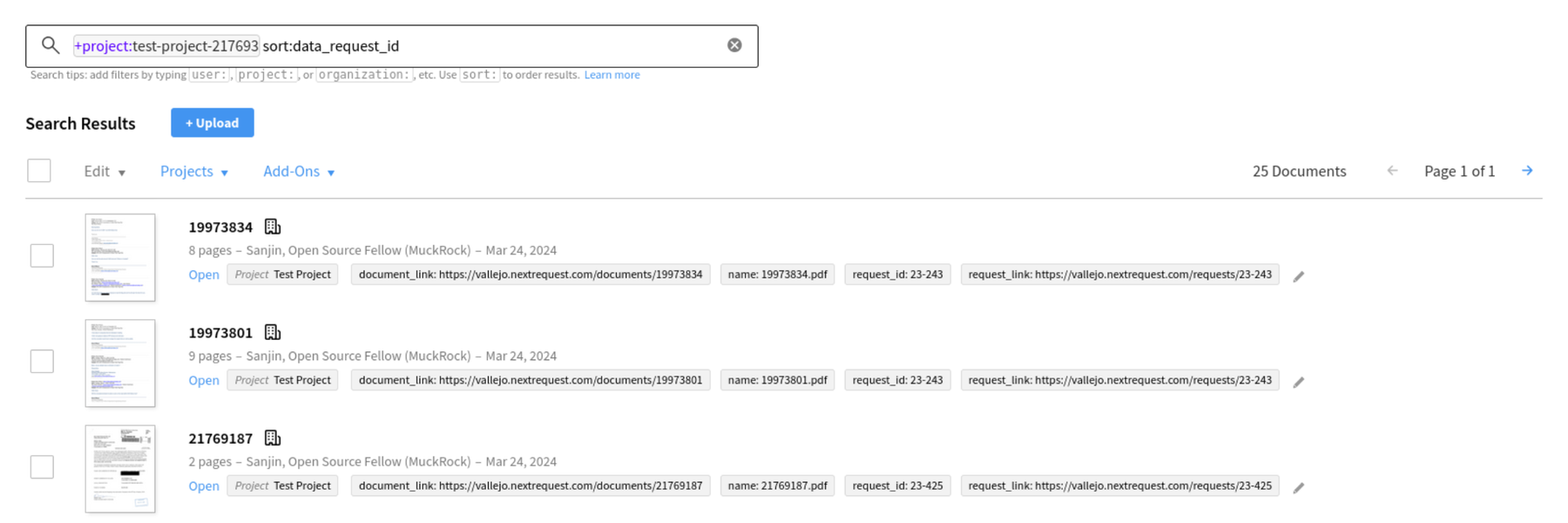

DC_USERNAMEandDC_PASSWORD, which are your DocumentCloud username and password, need to be set in your system. Instructions for how to do this on Linux, Windows and Mac OS X are available. - The project ID for the project you would like to upload these documents to must be found. When logged into DocumentCloud, when you click on a project, the project ID is the number that appears in the search bar after the name project.

- The file path to the directory of documents you are trying to upload.

- A CSV file that describes the documents, with at least two columns:

title, which is the human-readable title you’d like each document to have on DocumentCloud, and name, which is the name of the document as it is stored on your machine. Any additional columns will be uploaded as key/value pair metadata for the document. If you don’t have a CSV file handy, don’t fret: the batch upload script can generate one from a directory of documents. Run the following, replacing the capitalized fields with your own values:

python3 batch_upload.py -p PROJECT_ID --path PATH --csv CSV_NAME --generate_csv

Then run the same command without --generate_csv to do the upload:

python3 batch_upload.py -p PROJECT_ID --path PATH --csv CSV_NAME
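To make the CSV format concrete, here is a minimal Python sketch of the two rules described above: every row needs a title and a name, and any other column becomes key/value metadata. The functions generate_csv and read_rows are hypothetical and are not taken from batch_upload.py; in particular, using the file name without its extension as the title is an assumption, and the script’s own --generate_csv option may fill in titles differently.

```python
import csv
from pathlib import Path

# Hypothetical helpers for illustration only; not part of batch_upload.py.
REQUIRED_COLUMNS = ("title", "name")


def generate_csv(doc_dir: str, csv_path: str) -> int:
    """Write a CSV with one row per file in doc_dir and return the row count.

    Assumption: each title is the file name without its extension.
    Keep csv_path outside doc_dir so the CSV is not listed as a document
    on a later run.
    """
    # Materialize the file list before the CSV is created.
    files = sorted(p for p in Path(doc_dir).iterdir() if p.is_file())
    with open(csv_path, "w", newline="", encoding="utf-8") as fh:
        writer = csv.DictWriter(fh, fieldnames=list(REQUIRED_COLUMNS))
        writer.writeheader()
        for path in files:
            writer.writerow({"title": path.stem, "name": path.name})
    return len(files)


def read_rows(csv_path: str):
    """Yield (title, name, metadata) for each CSV row.

    Every column other than title and name is collected into the
    metadata dict, mirroring the key/value behavior described above.
    """
    with open(csv_path, newline="", encoding="utf-8") as fh:
        reader = csv.DictReader(fh)
        header = reader.fieldnames or []
        missing = [col for col in REQUIRED_COLUMNS if col not in header]
        if missing:
            raise ValueError(f"CSV is missing required columns: {missing}")
        for row in reader:
            metadata = {k: v for k, v in row.items() if k not in REQUIRED_COLUMNS}
            yield row["title"], row["name"], metadata
```

For example, a CSV with the columns title, name and year would yield a metadata dict like {"year": "2004"} for each row, while a CSV produced by generate_csv alone yields empty metadata until you add columns by hand.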

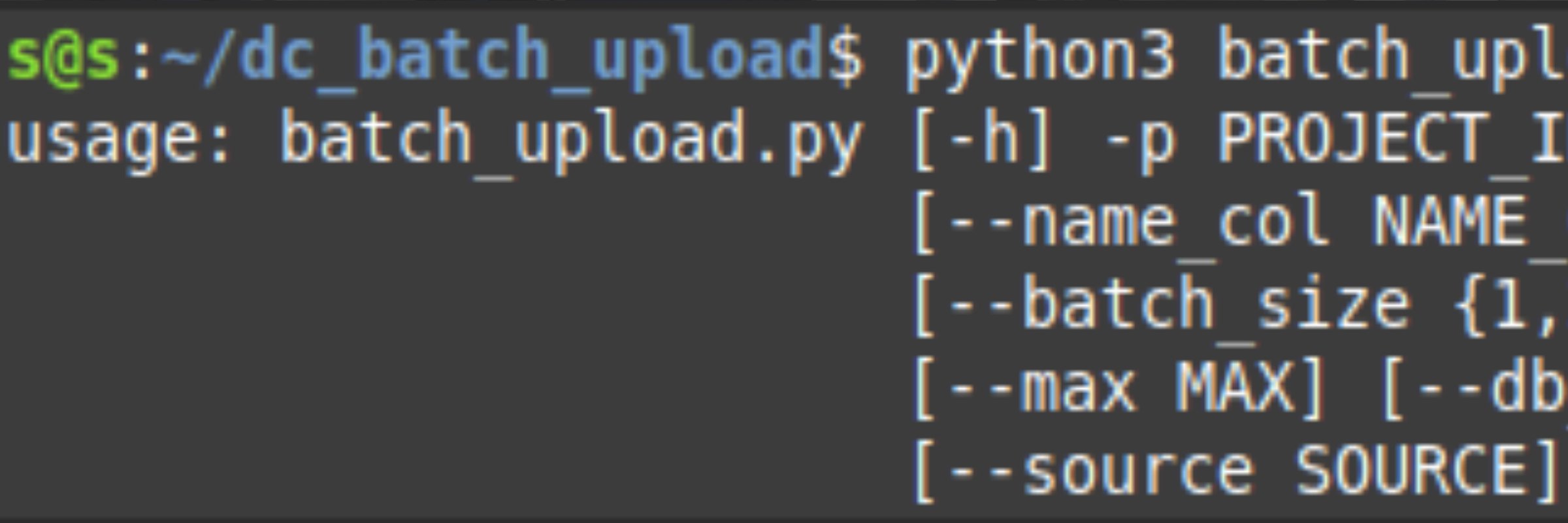
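Because both commands depend on DC_USERNAME and DC_PASSWORD being set, it can save a failed run to check them first. The sketch below is a hypothetical pre-flight check, not code from batch_upload.py; the only things it borrows from this post are the two variable names.

```python
import os

# Hypothetical pre-flight check; variable names come from the requirements above.
REQUIRED_VARS = ("DC_USERNAME", "DC_PASSWORD")


def load_credentials(env=os.environ):
    """Return (username, password), or raise if either is unset or empty."""
    missing = [name for name in REQUIRED_VARS if not env.get(name)]
    if missing:
        raise RuntimeError(
            "Set these environment variables before uploading: " + ", ".join(missing)
        )
    return env["DC_USERNAME"], env["DC_PASSWORD"]
```

Passing a plain dict as env makes the check easy to try without touching your real environment; calling it with no arguments reads the variables your shell actually exported.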

The script also lets you set the access level applied to the documents with --access ACCESS_LEVEL, specify the source of the documents with --source SOURCE and configure several other options. You can always pull up the script’s help menu by running:

python3 batch_upload.py -h
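If you upload several folders in a row, you may prefer to drive the script from Python. This is a minimal sketch that assumes it runs from the repository directory containing batch_upload.py; build_command and run_upload are hypothetical names, and the sketch only uses the flags named in this post (-p, --path, --csv, --access, --source). Valid values for ACCESS_LEVEL and SOURCE are whatever the script’s -h output lists.

```python
import subprocess
import sys


def build_command(project_id, path, csv_name, access=None, source=None):
    """Assemble the batch_upload.py argument list; optional flags are skipped when None."""
    # sys.executable reuses the interpreter running this wrapper instead of "python3".
    cmd = [sys.executable, "batch_upload.py", "-p", str(project_id),
           "--path", path, "--csv", csv_name]
    if access is not None:
        cmd += ["--access", access]
    if source is not None:
        cmd += ["--source", source]
    return cmd


def run_upload(*args, **kwargs):
    """Run one upload; check=True raises CalledProcessError on a non-zero exit."""
    return subprocess.run(build_command(*args, **kwargs), check=True)
```

Keeping the argument list separate from the subprocess call makes it easy to print or log each command before it runs.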

Case Studies

At DocumentCloud, our first round of Gateway Grantees have used the batch upload script to help preserve and publicize critical document collections at risk of being lost or destroyed. Fiquem Sabendo and Data Fixers, for example, used the script to archive over 1,000 documents related to Brazil’s Slave Labor Reports and make them publicly accessible for the first time. The collection is also categorized by year, making it easier to trace investigations into the use of slave labor in Brazil over time.

Gateway Grantee Centro de Periodismo Investigativo (CPI) has also used the batch upload script to archive over 25,000 documents, spread across two projects, to dig into the Fiscal Control Board of Puerto Rico. Since its creation, the Board has promoted a culture of secrecy, arguing that it is not subject to Puerto Rico’s constitutional right of access to information. CPI has been successfully litigating to open up the Board’s records, winning the release of over 20,000 previously secret documents. The batch upload script replaced the laborious process of manually selecting and batching the documents (those received through the lawsuit, plus others from the bankruptcy case and the Board’s own public archive) into smaller sets and checking for processing errors, saving CPI time better spent analyzing the large collection for newsworthy deliverables.

Closing Notes

By continuing to provide new and better tools for newsrooms across the globe to upload, store, annotate, redact, analyze and publish documents, the DocumentCloud team hopes to keep building a healthy ecosystem for journalists and researchers alike. If you have not already tried out DocumentCloud Add-Ons, check a few out. The library is expanding daily. There are now Add-Ons to convert EML and MSG email files to PDFs (Email Conversion Add-On), import documents from Google Drive, Dropbox, Mediafire and WeTransfer (Import Documents), transcribe audio files from Google Drive, Dropbox, YouTube and more (Transcribe Audio), uncover poorly redacted text in a set of documents (Bad Redactions), classify documents by type (SideKick) and scrape documents with a scheduled web scraper (Scraper).

If you use the batch upload script and need assistance or want to share some praise, please drop in to the MuckRock Slack or email us at info@documentcloud.org. We always look forward to hearing from you. You can also subscribe to our DocumentCloud and Release Notes newsletters, as well as MuckRock’s general weekly newsletter.

Header image is a screenshot of Python code from the DocumentCloud Bulk Upload library.